Think your AI system is fully compliant? Think again.

In today’s race to integrate artificial intelligence into everyday operations, businesses often charge ahead, excited by the promise of speed, scale, and automation. But amid the rush, one critical detail is getting buried: compliance.

From algorithmic bias and data privacy to emerging global regulations, the risks tied to AI are evolving faster than many legal teams can keep up with. The truth is that many organizations are unprepared for the complexity.

That’s where outsourcing AI compliance comes in.

This blog unpacks the often-overlooked compliance pitfalls of adopting AI and explains why your in-house resources might not be enough to handle them. We'll explore how outsourcing partners can bring specialized knowledge, scalable support, and fresh eyes to help businesses navigate AI's legal gray areas before they lead to costly missteps.

AI Tools Introduce a New Class of Compliance Risks

AI tools introduce a new class of compliance risks that go far beyond traditional data privacy concerns.

Most business leaders know laws like the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA). These frameworks focus on safeguarding personal data and ensuring informed consent. But with AI, the risk landscape changes dramatically — and often unpredictably.

AI systems today are no longer just handling data; they're making high-stakes decisions. These decisions can carry profound legal, ethical, and societal implications, especially when they're made without transparency or human oversight. Even AI-driven compliance automation, while helpful, introduces complexities of its own if it isn't correctly implemented and understood.

Algorithmic Decision-Making Under Legal Scrutiny

Whether it's loan approvals, medical recommendations, or hiring decisions, AI is increasingly making choices that directly affect individuals. The problem? If those choices are biased, inaccurate, or unexplainable, your company could face legal action or regulatory fines. Governments and watchdog groups are increasingly scrutinizing how AI decisions are made and whether they comply with anti-discrimination laws.

Black-Box Models That Can’t Be Audited

Many modern AI systems, especially deep learning models, are so complex that even their developers can’t fully explain how they arrive at specific outcomes. These “black-box” models pose a serious auditability problem. If you can’t explain how your AI came to a decision, you can’t defend it during a compliance audit or legal dispute.

Global Inconsistencies in AI Regulation

Adding to the challenge is the patchwork of regulations emerging across jurisdictions. For instance, the EU's AI Act takes a risk-based approach, while the U.S. so far relies largely on a mix of state-level laws and policies. What's compliant in one region may not pass muster in another. For global businesses, this creates a minefield of legal inconsistencies that are difficult to navigate with internal resources alone.

These risks are compounded by how fast AI is being implemented, often without parallel compliance planning.

Fast-Scaling Companies Often Lack the Internal Resources to Monitor AI Compliance

Fast-scaling companies often lack the internal resources to monitor AI compliance effectively.

Innovation tends to outpace regulation, and nowhere is this more evident than in the world of AI. Legal teams are stretched thin trying to interpret evolving legislation, while IT and product teams are laser-focused on developing AI capabilities. The result? Compliance becomes an afterthought.

Conflicting Priorities Between Innovation and Regulation

Internal tensions often arise between the drive to innovate and the need to comply. Business units want to move fast, iterate, and deploy, while compliance officers and legal departments need checks, documentation, and reviews that take time. When these priorities compete, AI systems can ship without adequate review, leaving companies exposed to legal and regulatory risk.

Limited In-House Expertise in AI Governance

Few legal or IT professionals are fully trained in the intricacies of AI governance. It's a niche field that requires an understanding of both technology and policy. Without specialized expertise, internal teams may miss red flags or struggle to interpret new compliance requirements as they emerge.

Reactive Rather Than Proactive Compliance Posture

Instead of building compliance into AI development from the start, many companies take a reactive approach, addressing risks only after problems arise, often after harm has already occurred. This creates liability exposure and reputational damage that the right processes could have prevented.

To stay ahead, companies need specialized help, which is where outsourcing becomes essential.

Outsourcing AI Compliance Support Reduces Internal Burdens

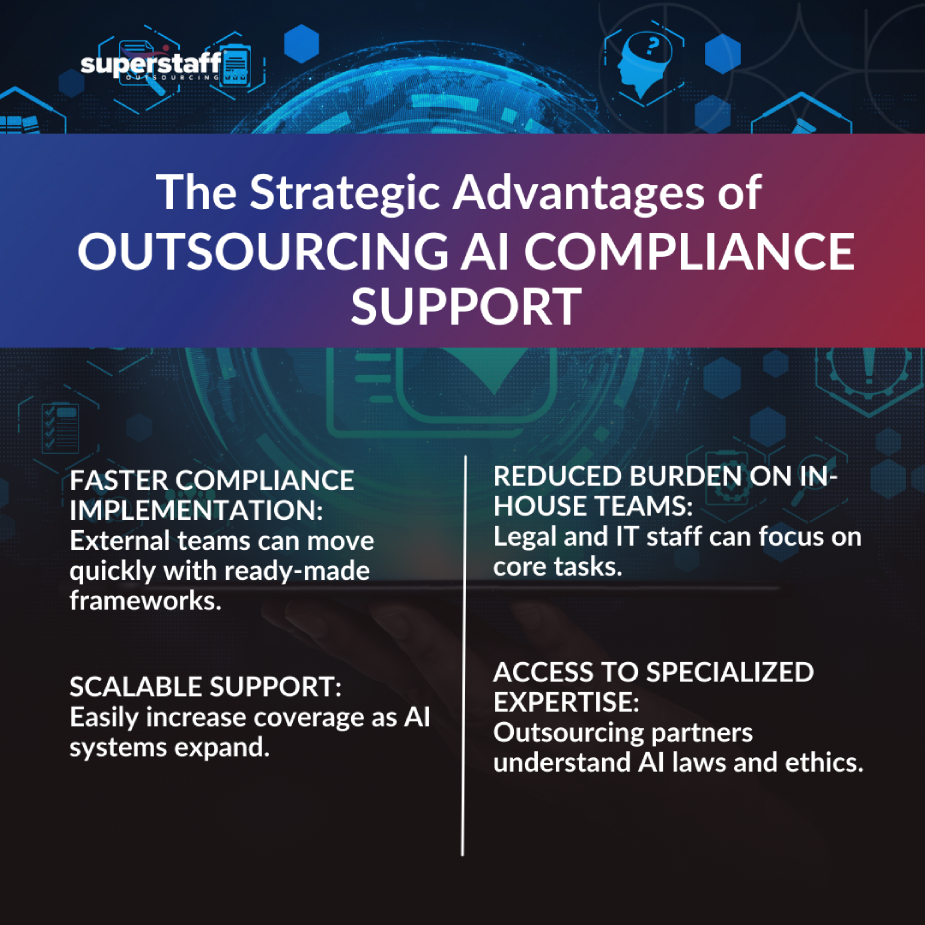

As AI adoption grows more complex, in-house legal and IT departments often struggle to keep up. That’s where outsourcing becomes a strategic advantage. Partnering with AI-literate outsourcing providers gives organizations scalable, cost-effective solutions tailored to meet fast-evolving regulatory demands.

These partners go beyond basic compliance checks, offering end-to-end assistance with risk assessments, documentation, internal audits, and more. One of the most overlooked benefits is stronger AI governance: by embedding external experts into your compliance workflows, you gain consistent oversight, policy enforcement, and accountability structures that help keep your AI systems legally and ethically sound.

From deployment to monitoring, outsourcing helps keep every stage of your AI lifecycle aligned with the regulations that apply to it, without exhausting your internal resources.

Offshore Teams Trained in AI Risk Monitoring and Policy Enforcement

Some business process outsourcing (BPO) firms now offer teams specifically trained to manage AI-related risks. These professionals are familiar with regulatory frameworks, AI audit standards, and data governance protocols, and they can provide round-the-clock monitoring that flags potential compliance issues before they escalate.

Support for Global Regulatory Filings and Cross-Border Governance

With global AI regulations diverging, companies must often file separate reports or meet distinct standards per region. Outsourcing partners can manage cross-border data governance tasks, ensuring that your AI systems comply with local laws, wherever you operate.

Compliance Hotlines and Reporting Support Handled Externally

Whistleblower hotlines, incident reporting systems, and compliance dashboards are critical to maintaining transparency. Rather than managing these in-house, many companies delegate them to outsourcing providers, gaining neutral oversight and quicker turnaround.

With outsourcing, businesses can scale AI confidently while staying aligned with legal expectations.

Outsourcing Also Enables Ethical AI Use

Legal compliance is only one side of the coin. Ethical concerns — particularly around AI bias, discrimination, and fairness — are just as critical. Even if an AI decision technically meets regulatory requirements, it may fall short of public expectations or violate a company’s core values. That’s why many organizations are incorporating AI risk management strategies into their governance frameworks, and outsourcing plays a central role in making those strategies work.

External QA Teams Reviewing AI Outputs for Fairness and Bias

Dedicated quality assurance (QA) teams can perform human-in-the-loop audits, analyzing how AI systems treat different demographics or input types. This human oversight helps catch unfair patterns or biases that might otherwise slip through automated testing.
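To make "analyzing how AI systems treat different demographics" more concrete, here is one common quantitative check a QA team might run alongside human review: the "four-fifths rule" from the U.S. Uniform Guidelines on Employee Selection Procedures, under which a group whose selection rate is below 80% of the highest group's rate is treated as showing possible adverse impact. The minimal Python sketch below assumes the AI system's decisions are available as (group, approved) pairs; the function names, data format, and sample numbers are hypothetical, and a flagged group is a prompt for closer human review rather than a finding of bias.

```python
from collections import Counter


def selection_rates(decisions):
    """Return each group's approval rate from (group, approved) pairs.

    Assumed (hypothetical) input format: an iterable of (group_label, bool).
    """
    totals = Counter()
    approvals = Counter()
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def adverse_impact_ratios(decisions):
    """Compare each group's selection rate to the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # No group was approved at all, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(decisions, threshold=0.8):
    """List groups whose ratio falls below the four-fifths (0.8) threshold."""
    ratios = adverse_impact_ratios(decisions)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical sample: group A approved 40 of 100, group B approved 25 of 100.
sample = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 25 + [("B", False)] * 75
)
print(adverse_impact_ratios(sample))  # B's rate is 0.25 / 0.40 = 0.625 of A's
print(flag_groups(sample))  # -> ['B']
```

A ratio-based check like this is cheap to automate, which is why it pairs well with the human-in-the-loop audits described above: the code surfaces which groups deserve attention, and reviewers judge whether the disparity reflects unfair treatment.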

Documentation and Reporting Support for Ethical Review Boards

For industries like healthcare, finance, or education, companies often have ethics committees or review boards. Outsourcing partners can provide the documentation and impact reports needed to evaluate and improve AI ethics standards.

Cultural Context and Multilingual Oversight for Global Deployments

AI doesn’t operate in a vacuum. Its decisions are interpreted through local cultures and languages. BPO teams with multilingual and multicultural fluency can better review AI outcomes in context, ensuring appropriateness and fairness across regions.

But not all outsourcing partners are prepared for this complexity, so choosing the right one is key.

Choose the Right Outsourcing Partner for Long-Term Protection

Choosing an outsourcing partner with AI compliance expertise is key to long-term protection. Not every outsourcing firm is equipped to handle the nuances of AI governance, so business leaders must vet providers carefully, looking for proven experience in AI-specific compliance domains.

Certifications and Attestations in Data Security and Privacy (e.g., ISO/IEC 27001, SOC 2)

At a minimum, your partner should demonstrate a strong data governance foundation through recognized credentials. Look for ISO/IEC 27001 certification, SOC 2 attestation reports, and other privacy and security standards that reflect an organizational commitment to confidentiality and compliance.

Familiarity With Sector-Specific Regulations

AI risks look different depending on the industry. In finance, it’s about fair lending. In healthcare, it’s clinical accuracy and patient privacy. A strong outsourcing partner should be familiar with your sector’s unique compliance environment and ready to tailor support accordingly.

Ability To Scale With Your AI Roadmap

AI compliance isn’t static. Your partner should have the operational flexibility to support your evolving needs, from pilot projects to enterprise-wide rollouts. This means offering not just headcount but strategic insight and long-term collaboration. With the right partner, compliance becomes an enabler, not a blocker, of innovation.

Build a Future-Ready AI Compliance Strategy

In the AI era, compliance is evolving fast, and outsourcing is a smart way to keep up without slowing down.

AI adoption is accelerating, but so are the risks. From algorithmic bias to black-box models that resist auditing, businesses are entering a new legal frontier for which many internal teams are unprepared. The good news? You don't have to go it alone.

Outsourcing AI compliance allows companies to delegate the complexity to trained experts while maintaining strategic control. From documentation and ethics reviews to global regulatory filings, outsourcing gives you the tools to scale AI responsibly, without compromising compliance or ethics.

Concerned about hidden compliance risks in your AI strategy? Explore how SuperStaff can help you build a future-ready compliance framework that protects your brand and enables innovation.